Introduction

This paper describes improvements made to the macOS* machine learning stack on Intel® Processor Graphics in macOS Catalina* (macOS 10.15), Apple*’s latest Mac* operating system. It summarizes some of the improvements Intel and Apple have made to Core ML* and the Metal Performance Shaders (MPS) software stack to take full advantage of the Intel Processor Graphics architecture. The paper also describes Create ML, which lets you create a model (.mlmodel) on an Intel® powered device running macOS without your data leaving the device.

This paper is a follow-up to the Apple Machine Learning on Intel Processor Graphics paper and builds on its content.

Target Audience

Software developers, platform architects, data scientists, and academics seeking to maximize machine learning performance on Intel Processor Graphics on macOS platforms will find this content useful.

Architecture

This section recaps the inference and training architecture of macOS Catalina on Intel Processor Graphics.

Core ML*

Core ML, available on Apple devices, is the main framework for accelerating domain-specific ML inference such as image analysis, object detection, natural language processing, and more. Core ML lets you take advantage of Intel processors and Intel Processor Graphics technology to build and run ML workloads on a device, so your data does not need to leave it. This removes the dependency on network connectivity and addresses security and privacy concerns. Core ML is built on top of low-level frameworks such as MPS (on Intel Processor Graphics) and Basic Neural Network Subroutines (BNNS, on Intel processors), which are highly tuned and optimized for Intel hardware to maximize its capability.
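As a minimal sketch, assuming you already have a compiled classifier model (.mlmodelc), the following Swift code loads it with `MLModelConfiguration.computeUnits` set to `.all`, which lets Core ML decide whether to run work on the CPU or on Intel Processor Graphics, and then runs one prediction entirely on the device. The function names `topLabel(from:)` and `classifyOnDevice(...)`, and the input and output feature names passed to it, are hypothetical; check your model's description for the real feature names. The Core ML part is wrapped in `#if canImport(CoreML)` so the plain-Swift helper also compiles where Core ML is unavailable.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// Returns the label with the highest probability from a classifier's
/// probability dictionary, or nil if the dictionary is empty.
func topLabel(from probabilities: [String: Double]) -> (label: String, probability: Double)? {
    guard let best = probabilities.max(by: { $0.value < $1.value }) else { return nil }
    return (best.key, best.value)
}

#if canImport(CoreML)
/// Loads a compiled model and runs one prediction on the device.
/// `inputName` and `probabilitiesName` are hypothetical feature names.
@available(macOS 10.14, *)
func classifyOnDevice(compiledModelURL: URL,
                      inputName: String,
                      input: MLFeatureValue,
                      probabilitiesName: String) throws -> (label: String, probability: Double)? {
    let config = MLModelConfiguration()
    config.computeUnits = .all  // let Core ML choose between CPU (BNNS) and GPU (MPS)
    let model = try MLModel(contentsOf: compiledModelURL, configuration: config)

    let features = try MLDictionaryFeatureProvider(dictionary: [inputName: input])
    let output = try model.prediction(from: features)

    // Classifier outputs expose class probabilities as [AnyHashable: NSNumber].
    guard let raw = output.featureValue(for: probabilitiesName)?.dictionaryValue else { return nil }
    var probabilities: [String: Double] = [:]
    for (key, value) in raw {
        if let label = key as? String { probabilities[label] = value.doubleValue }
    }
    return topLabel(from: probabilities)
}
#endif
```

Because nothing in this path makes a network request, the input and the prediction stay on the Mac.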

Metal Performance Shaders

MPS is the main building block Core ML uses to run ML workloads on graphics processing units (GPUs). To target the underlying GPU directly, you can also write applications against the MPS API. With MPS, you can encode machine learning dispatches and commands into the same command buffers used for Metal-based 3D and compute workloads in traditional graphics applications.
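To illustrate encoding MPS work into an ordinary Metal command buffer, here is a hedged Swift sketch that multiplies two small matrices with `MPSMatrixMultiplication`, a basic primitive behind many neural network layers, and keeps a plain-Swift CPU reference for checking the result. The function names `gpuMatMul` and `referenceMatMul` are hypothetical, and the matrices are assumed to be row-major `Float` values with no padding between rows.

```swift
import Foundation
#if canImport(MetalPerformanceShaders)
import Metal
import MetalPerformanceShaders
#endif

/// CPU reference for C = A x B with row-major Float matrices
/// (A is m x k, B is k x n, C is m x n). Used to check the GPU result.
func referenceMatMul(_ a: [Float], _ b: [Float], m: Int, k: Int, n: Int) -> [Float] {
    precondition(a.count == m * k && b.count == k * n, "matrix sizes do not match")
    var c = [Float](repeating: 0, count: m * n)
    for i in 0..<m {
        for j in 0..<n {
            var sum: Float = 0
            for p in 0..<k { sum += a[i * k + p] * b[p * n + j] }
            c[i * n + j] = sum
        }
    }
    return c
}

#if canImport(MetalPerformanceShaders)
/// Encodes an MPS matrix multiplication into an ordinary Metal command buffer.
/// Render or compute encoders for other work could be added to the same buffer.
func gpuMatMul(_ a: [Float], _ b: [Float], m: Int, k: Int, n: Int) -> [Float]? {
    guard let device = MTLCreateSystemDefaultDevice(),
          MPSSupportsMTLDevice(device),
          let queue = device.makeCommandQueue(),
          let commandBuffer = queue.makeCommandBuffer() else { return nil }

    let floatSize = MemoryLayout<Float>.stride

    // Wraps a shared-storage MTLBuffer (optionally filled with values) as an MPSMatrix.
    func makeMatrix(rows: Int, cols: Int, values: [Float]?) -> MPSMatrix? {
        let length = rows * cols * floatSize
        let buffer: MTLBuffer?
        if let values = values {
            buffer = device.makeBuffer(bytes: values, length: length, options: .storageModeShared)
        } else {
            buffer = device.makeBuffer(length: length, options: .storageModeShared)
        }
        guard let storage = buffer else { return nil }
        let descriptor = MPSMatrixDescriptor(rows: rows, columns: cols,
                                             rowBytes: cols * floatSize, dataType: .float32)
        return MPSMatrix(buffer: storage, descriptor: descriptor)
    }

    guard let left = makeMatrix(rows: m, cols: k, values: a),
          let right = makeMatrix(rows: k, cols: n, values: b),
          let result = makeMatrix(rows: m, cols: n, values: nil) else { return nil }

    let matmul = MPSMatrixMultiplication(device: device,
                                         transposeLeft: false, transposeRight: false,
                                         resultRows: m, resultColumns: n, interiorColumns: k,
                                         alpha: 1.0, beta: 0.0)
    matmul.encode(commandBuffer: commandBuffer, leftMatrix: left,
                  rightMatrix: right, resultMatrix: result)
    commandBuffer.commit()
    commandBuffer.waitUntilCompleted()

    // Shared storage lets the CPU read the result directly after completion.
    let pointer = result.data.contents().bindMemory(to: Float.self, capacity: m * n)
    return Array(UnsafeBufferPointer(start: pointer, count: m * n))
}
#endif
```

On a Mac, `gpuMatMul(a, b, m: 2, k: 3, n: 2)` should match `referenceMatMul` with the same arguments up to floating-point rounding.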

Create ML

Create ML, now a standalone app, lets you build various types of custom machine learning models, including image classifiers, object detectors, activity classifiers, and others. It uses transfer learning with Intel Processor Graphics to train models faster. Major improvements and additions were made to Create ML, so you can now create and train custom machine learning models on macOS platforms that use Intel Processor Graphics, and then integrate those models into your Core ML applications.

Figure 1. macOS Catalina machine learning on Intel Processor Graphics.

Performance

macOS Catalina delivers significant improvements over the earlier release, macOS Mojave*, on Intel Processor Graphics. With macOS Catalina, we deployed improved machine learning algorithms for key primitives, optimized how work is dispatched to the underlying hardware, and more. Such improvements are a continuous process, and Figure 2 gives you an idea of where the performance gains are (see the disclaimer). The numbers in Figure 2 were generated on macOS Catalina and macOS Mojave using a 13-inch MacBook Pro* with Intel® Processor Graphics.

Figure 2. Performance improvements using Core ML.

The denoiser workload uses a trained Intel® Open Image Denoise model to denoise a high-resolution noisy image. Figures 3 and 4 show the result of running Intel Open Image Denoise through Core ML on a 13-inch MacBook Pro 2016 powered by Intel Processor Graphics Gen9 architecture.

Figure 3. Denoised Bistro.

Figure 4. Denoised Sponza.

Figure 5 shows the baseline for the Web Machine Learning (WebML) comparison: a model running through the legacy WebGL* API with macOS Catalina on a 13-inch MacBook Pro 2016 powered by Intel Processor Graphics Gen9 architecture.

Figure 5. Chromium* open source project running with WebGL API.

Figure 6 shows the same model running through the WebML implementation, and the performance gain that WebML powered by Metal Performance Shaders and Intel Processor Graphics achieves over the WebGL implementation on the same hardware.

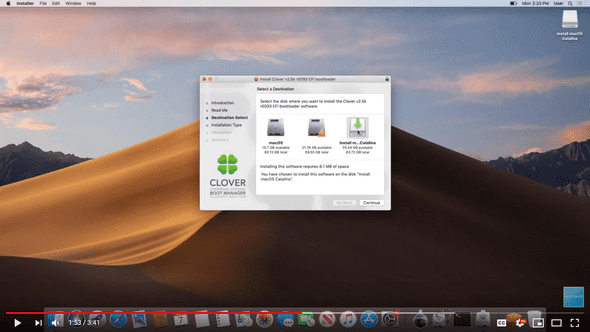

Figure 6. Chromium open source project running with WebML API powered by MPS.

Note: The examples from Figures 5 and 6 can be found here.

Significant improvements were also made to third-party applications such as Adobe Lightroom* Enhance Detail, Pixelmator Pro*, and others. The chart in Figure 7 shows an example of the improvement we deployed for the Enhance Detail feature in Adobe Lightroom.

Figure 7. Performance improvement with Adobe Lightroom Enhance Detail.

What's New

For the full list of new capabilities and features, visit the Apple Machine Learning and Metal Performance Shaders pages, which have detailed information on Core ML, Create ML, and more.

With macOS Catalina, Apple and Intel improved the performance of all the key neural network layers by deploying new convolution algorithms, optimizing memory usage, reducing network build time, adding batch inference support to make better use of the underlying hardware, adding smart heuristics that pick the best algorithm or kernel for specific layers, and more.

Batching

To keep the hardware fully occupied, we recommend using the MPS batch encode API and/or the Core ML batch interfaces to submit work to your neural network. With the batching API, instead of sending one input at a time, you send multiple inputs together, and the hardware can schedule the work more efficiently. Applications that use the API also pick up improvements automatically when moving to newer generations of Intel Processor Graphics. With these approaches, the underlying driver keeps the hardware consistently busy, with high utilization of the Intel Processor Graphics execution units.

The chart in Figure 8 shows some of the performance improvements that can be achieved using the batch API.

Figure 8. Metal Performance Shaders improvements with batching.
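The batching recommendation can be sketched with the Core ML batch interface: `MLArrayBatchProvider` wraps several inputs, and a single `MLModel.predictions(from:options:)` call submits them together instead of one call per input. This is a minimal sketch, assuming you already have a loaded model and an array of input feature providers. The helper names `makeBatches` and `predictInBatches` are hypothetical, and splitting very large input sets into fixed-size chunks is our own assumption for bounding memory use, not a documented requirement. The Core ML part is guarded with `#if canImport(CoreML)`.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// Splits items into consecutive batches of at most `size` elements.
func makeBatches<T>(_ items: [T], size: Int) -> [[T]] {
    precondition(size > 0, "batch size must be positive")
    return stride(from: 0, to: items.count, by: size).map {
        Array(items[$0..<min($0 + size, items.count)])
    }
}

#if canImport(CoreML)
/// Submits each batch with one predictions call instead of one call per input,
/// so Core ML (and MPS underneath) can schedule the work together.
@available(macOS 10.14, *)
func predictInBatches(model: MLModel,
                      inputs: [MLFeatureProvider],
                      batchSize: Int) throws -> [MLFeatureProvider] {
    var outputs: [MLFeatureProvider] = []
    outputs.reserveCapacity(inputs.count)
    for batch in makeBatches(inputs, size: batchSize) {
        let provider = MLArrayBatchProvider(array: batch)
        let results = try model.predictions(from: provider, options: MLPredictionOptions())
        for index in 0..<results.count {
            outputs.append(results.features(at: index))
        }
    }
    return outputs
}
#endif
```

The best batch size depends on the model and the hardware, so treat `batchSize` as a tuning parameter and measure it on your target Mac.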

Create ML

Using Create ML, you can create your ML models directly on your macOS device running on Intel Processor Graphics. You can create ML models on the device to perform several types of tasks, including image classification, activity classification, object detection, and more.

Simple Image Classifier Using Create ML

The following simple example shows how to quickly create a custom image-classifier model using Create ML on an Intel Processor Graphics powered platform.

To create a simple “car make” classifier that can detect the differences between Tesla*, BMW*, and other cars, follow these steps:

- Open the Create ML app on your macOS machine.

- Select the model type to create. For this example, it is the Image Classifier model.

- Add a description of the model.

- Select the training data set with labels. For this training data set, you create three folders (BMW, Tesla, Other) to contain images of the car categories. The folder name is the prediction label for that category of cars.

- Select the images to be used to test the model. Use images of the same categories that differ from the ones used in the training set.

- Select Train to start the model training.

- As the status above shows, it took only two minutes to process the training data set and train the model. When the training phase is complete, the Create ML interface gives additional information, such as the precision of the model based on the testing data set. It’s now time to test the model with some custom images it has not seen before.

As the following four screens show, the car models were identified correctly.

- The fifth screen above shows that the BMW car was not identified correctly. To improve the model’s accuracy after incorrect predictions, adjust knobs in the Create ML app such as the training data set, the number of iterations, and others.

- After adjusting these knobs, save the model as an .mlmodel file for deployment with your Core ML application.

- You can now use the .mlmodel in the Core ML application to detect the differences between these three types of cars.
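The same workflow can also be scripted with the Create ML framework instead of the app. The following Swift sketch assumes training and testing folders laid out as described in the steps above (one subfolder per label, such as BMW, Tesla, and Other). It counts the images per label before training, trains an `MLImageClassifier` from labeled directories, reports training and testing accuracy, and writes the .mlmodel file. The function names, paths, and metadata strings are hypothetical. Knobs such as the iteration count live in `MLImageClassifier.ModelParameters`; this sketch keeps the defaults.

```swift
import Foundation
#if canImport(CreateML)
import CreateML
#endif

/// Counts the files in each label folder (for example BMW, Tesla, Other) under `root`,
/// so an empty or misspelled category is caught before training starts.
func imageCountsPerLabel(in root: URL) throws -> [String: Int] {
    let fm = FileManager.default
    var counts: [String: Int] = [:]
    for folder in try fm.contentsOfDirectory(at: root, includingPropertiesForKeys: nil) {
        var isDirectory: ObjCBool = false
        guard !folder.lastPathComponent.hasPrefix("."),
              fm.fileExists(atPath: folder.path, isDirectory: &isDirectory),
              isDirectory.boolValue else { continue }
        // Skip hidden files such as .DS_Store.
        let files = try fm.contentsOfDirectory(atPath: folder.path).filter { !$0.hasPrefix(".") }
        counts[folder.lastPathComponent] = files.count
    }
    return counts
}

#if canImport(CreateML)
/// Trains an image classifier where each subfolder name becomes a prediction label,
/// evaluates it on held-out images, and saves it as an .mlmodel file.
@available(macOS 10.14, *)
func trainCarClassifier(trainingDir: URL, testingDir: URL, outputURL: URL) throws {
    let classifier = try MLImageClassifier(trainingData: .labeledDirectories(at: trainingDir))
    let trainingAccuracy = (1.0 - classifier.trainingMetrics.classificationError) * 100
    let testing = classifier.evaluation(on: .labeledDirectories(at: testingDir))
    let testingAccuracy = (1.0 - testing.classificationError) * 100
    print("Training accuracy: \(trainingAccuracy)%, testing accuracy: \(testingAccuracy)%")

    // Hypothetical metadata; this corresponds to the description step in the app.
    let metadata = MLModelMetadata(author: "Example Author",
                                   shortDescription: "Classifies car make: BMW, Tesla, or Other",
                                   version: "1.0")
    try classifier.write(to: outputURL, metadata: metadata)
}
#endif
```

Calling `imageCountsPerLabel(in:)` on both the training and testing folders first is a cheap way to confirm that every label has images before spending time on training.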

This example shows how Intel Processor Graphics enables Create ML, Core ML, and MPS (on macOS platforms) to simplify the training process with transfer learning.

For more information and all the latest features, visit Create ML.

Summary

This paper shows how the improvements Apple and Intel made to existing and new parts of the software stack (Core ML, Create ML, and MPS) take advantage of the underlying Intel Processor Graphics hardware.

References

For more information or to get started, download the tools or libraries from the following links: